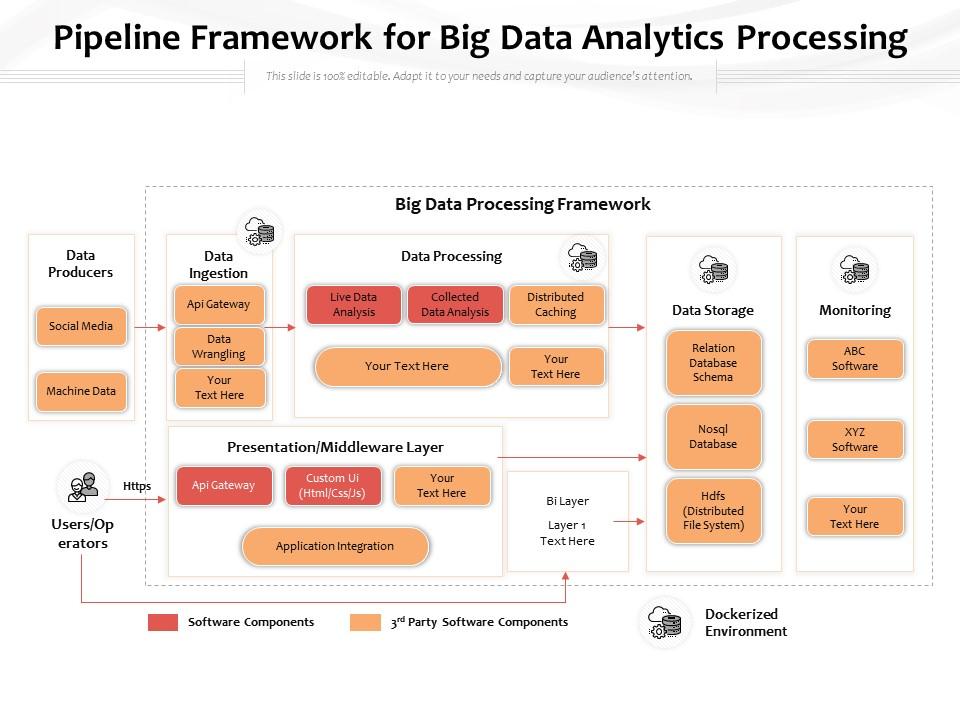

Big Data Processing Frameworks

Big data processing frameworks make it practical to store, process, and analyze datasets that are too large for a single machine. This article explores the key components and functionality of the most widely used frameworks.

Hadoop, Spark, Flink, and Storm each make different trade-offs between batch throughput, streaming latency, and ease of use. The sections below describe how each one works and how organizations use it to draw insights from their data and make informed decisions.

Overview of Big Data Processing Frameworks

Big data processing frameworks are essential tools that enable organizations to analyze, process, and derive insights from large volumes of data. These frameworks are designed to handle the immense scale and complexity of big data, providing the necessary infrastructure and algorithms to efficiently process and extract valuable information.

Key Characteristics of Effective Big Data Processing Frameworks

- Scalability: Effective frameworks should be able to scale horizontally to handle increasing data volumes.

- Fault Tolerance: The ability to recover from failures and continue processing without data loss is crucial for reliability.

- Performance: The framework should deliver high throughput and, for streaming workloads, low latency.

- Flexibility: The framework should support various data types and processing tasks to accommodate diverse use cases.

Types of Big Data Processing Frameworks

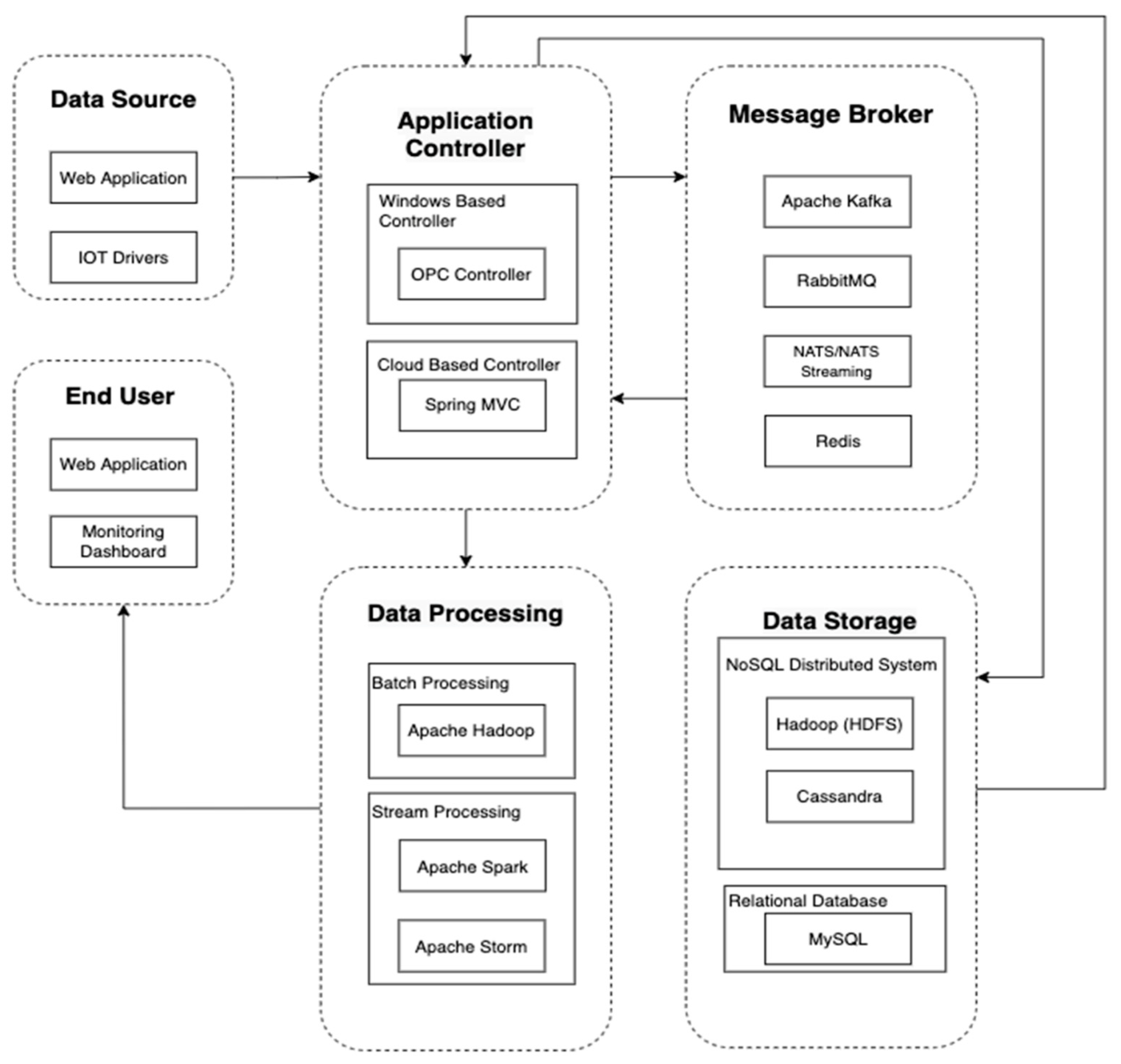

- Batch Processing Frameworks: Apache Hadoop MapReduce is the classic example, optimized for processing large volumes of stored data in batches. Apache Spark also started out as a batch engine.

- Stream Processing Frameworks: Tools like Apache Flink, Apache Storm, and Kafka Streams (the stream processing library that ships with Apache Kafka) are designed for processing real-time data streams with low latency.

- Hybrid Processing Frameworks: Some frameworks, including Apache Spark and Apache Flink, combine batch and stream processing capabilities to offer a comprehensive solution for diverse data processing needs.

Hadoop Framework

Apache Hadoop is an open-source software framework for distributed storage and processing of large datasets using a cluster of commodity hardware.

Architecture of Hadoop

The Hadoop framework consists of the following key components:

- Hadoop Distributed File System (HDFS): A distributed file system that stores data across multiple machines.

- Yet Another Resource Negotiator (YARN): A resource management layer for scheduling and managing resources in the cluster.

- MapReduce: A programming model that processes large datasets in parallel through a map phase followed by a reduce phase.
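
The MapReduce model in the list above can be illustrated with a single-process word count. This is a minimal sketch in plain Python, not Hadoop's API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical, and a real cluster would run the map and reduce steps in parallel on many machines.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all values that share the same key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: collapse the values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over an iterable of lines."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```

Calling `word_count(["big data big insights", "data at scale"])` counts `big` and `data` twice each and every other word once. Because each map call sees only one line and each reduce call sees only one key, both phases can be spread across machines without coordination beyond the shuffle.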

Role of Hadoop in Processing Big Data

Hadoop spreads both storage and computation across the cluster: HDFS replicates data blocks across machines, and MapReduce jobs run on the nodes that hold the data. As a result, capacity grows by adding machines, and jobs can survive the failure of individual nodes.

Use Cases of Hadoop

Hadoop is commonly used in various industries and use cases, such as:

- Financial Services: Analyzing financial transactions and detecting fraud.

- Healthcare: Processing and analyzing large volumes of medical data for research and patient care.

- Retail: Analyzing customer data for personalized marketing and recommendations.

- Telecommunications: Processing call detail records and network data for optimization and fraud detection.

Spark Framework

Apache Spark is a big data processing framework known for its speed, ease of use, and versatility. It provides a unified analytics engine with built-in modules for streaming, SQL, machine learning, and graph processing.

Advantages of Spark Framework

Spark is designed for speed and efficiency, making it well suited to processing large volumes of data in a timely manner. The framework keeps intermediate data in memory where possible, which allows it to perform many computations faster than Hadoop MapReduce, which writes intermediate results to disk between stages. This speed advantage matters most for interactive and near-real-time applications where timely insights are essential.

Spark also provides APIs in multiple languages, including Java, Scala, Python, and R, enabling developers to write applications quickly. It can read from HDFS and other Hadoop-compatible data sources and can run on YARN, so organizations already using Hadoop can adopt it within their existing big data ecosystem.

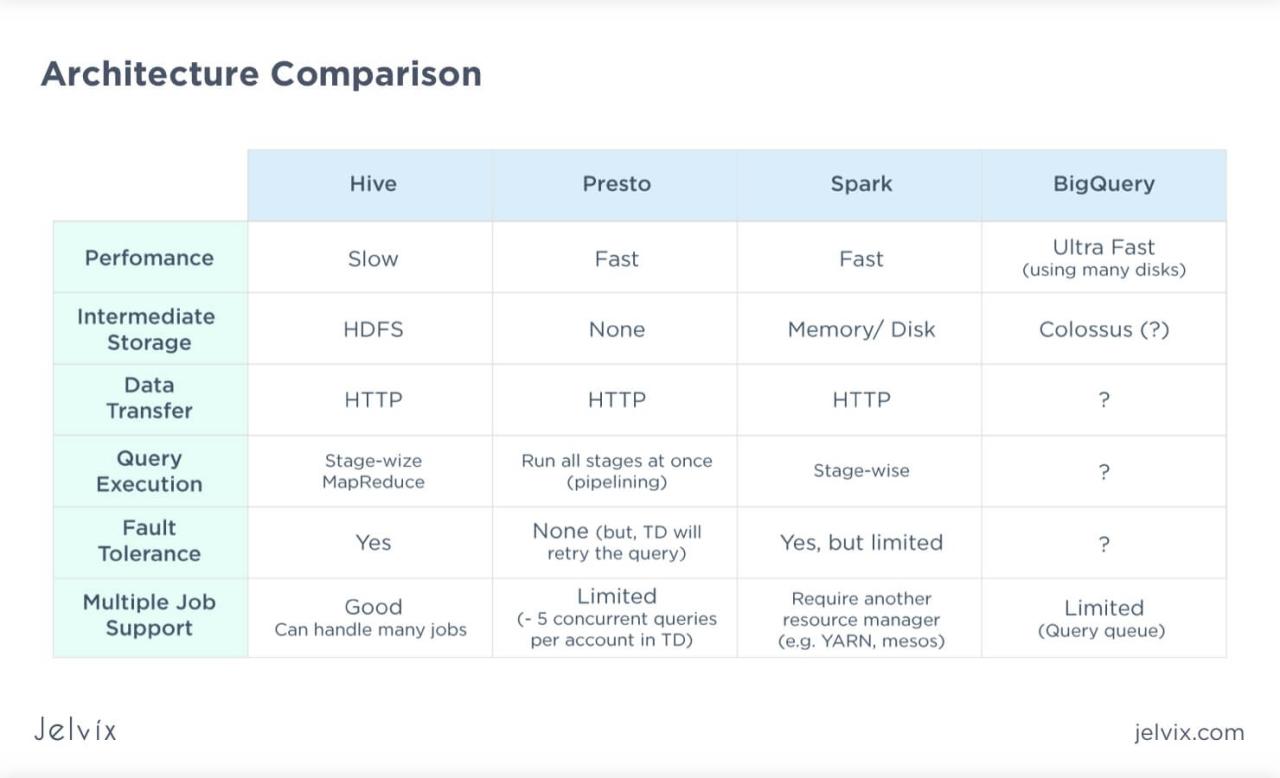

Performance Comparison with Other Frameworks

Compared with Hadoop MapReduce, Spark is typically faster and more efficient. Its ability to cache data in memory and optimize task execution shortens processing times, and the advantage is most pronounced for iterative algorithms and complex analytics tasks that read the same dataset repeatedly.
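
To see why caching helps iterative algorithms, consider a toy job that makes several passes over the same data. The sketch below is plain Python rather than Spark code; `CountingSource` and `iterative_mean` are hypothetical names, and the `reads` counter stands in for expensive reads from disk.

```python
class CountingSource:
    """Hypothetical stand-in for slow storage; counts how often it is read."""

    def __init__(self, values):
        self._values = list(values)
        self.reads = 0

    def load(self):
        self.reads += 1
        return list(self._values)


def iterative_mean(source, iterations, cache):
    """Refine an estimate toward the data mean with repeated passes over the data."""
    cached = source.load() if cache else None
    estimate = 0.0
    for _ in range(iterations):
        # Without caching, every iteration goes back to storage.
        data = cached if cache else source.load()
        # Move the estimate halfway toward the mean of the data.
        estimate += 0.5 * (sum(data) / len(data) - estimate)
    return estimate
```

With ten iterations, the uncached run reads the source ten times while the cached run reads it once, and both produce the same estimate. In a real cluster the cost of each avoided read is what makes in-memory caching pay off.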

Additionally, Spark tracks how each dataset was derived, so when a node fails it can recompute only the lost partitions instead of restarting the whole job. This allows reliable processing of large datasets without a large performance penalty for fault tolerance.

Real-time Data Processing Capabilities

Spark also processes live data through its Spark Streaming module. Spark Streaming handles continuous data streams in near real time, making it suitable for applications that need up-to-date analytics or quick responses to incoming data.

Spark Streaming divides the incoming stream into small batches (micro-batches) and supports windowed computations over them. This design provides high throughput and fault tolerance, with latency bounded below by the batch interval. It makes Spark a popular choice for use cases such as fraud detection, IoT data processing, and monitoring systems that need timely insights from streaming data.
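
The combination of micro-batches and windowed computations can be sketched in a few lines. This is an illustration in plain Python, not the Spark Streaming API: `micro_batches` and `windowed_sums` are hypothetical helpers, and events are assumed to be `(timestamp, value)` pairs.

```python
from collections import deque


def micro_batches(events, batch_interval):
    """Group (timestamp, value) events into consecutive fixed-length batches."""
    batches = {}
    for timestamp, value in events:
        batches.setdefault(int(timestamp // batch_interval), []).append(value)
    if not batches:
        return []
    # Include empty batches so that every interval is represented.
    return [batches.get(i, []) for i in range(min(batches), max(batches) + 1)]


def windowed_sums(batches, window_length):
    """Sum the values in a sliding window over the last `window_length` batches."""
    window = deque(maxlen=window_length)
    results = []
    for batch in batches:
        window.append(sum(batch))
        results.append(sum(window))
    return results
```

For the events `[(0.2, 1), (0.7, 2), (1.1, 3), (3.5, 4)]` with a batch interval of 1, the batches are `[[1, 2], [3], [], [4]]`, and a window of two batches yields the sums `[3, 6, 3, 4]`. A result can only be produced once its batch has closed, which is why the batch interval sets a floor on latency.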

Flink Framework

Apache Flink is an open-source data processing framework known for high performance and low-latency processing. It is designed to handle both batch and streaming workloads efficiently.

Key Features of Flink Framework

- Support for both batch processing and real-time stream processing

- Advanced APIs for data manipulation and analytics

- Efficient fault tolerance mechanisms

- Native support for event time processing

- Dynamic scaling to adapt to changing workloads
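
Event time processing, listed above, means assigning each record to a window by the time it occurred rather than the time it arrived. The sketch below shows the idea with tumbling windows and a simple watermark. It is a simplified, single-process model and not Flink's API; `tumbling_event_time_counts` and its parameters are hypothetical names chosen for illustration.

```python
from collections import defaultdict


def tumbling_event_time_counts(event_times, window_size, max_out_of_orderness):
    """Count events per tumbling window, using event time and a watermark.

    `event_times` lists event timestamps in arrival order, which may differ
    from event-time order. The watermark trails the largest timestamp seen
    by `max_out_of_orderness`; a window is emitted once the watermark
    reaches its end, and events for already-emitted windows are dropped.
    """
    open_windows = defaultdict(int)  # window start -> event count
    emitted = []
    watermark = float("-inf")
    for event_time in event_times:
        window_start = (event_time // window_size) * window_size
        if window_start + window_size <= watermark:
            continue  # too late: this window has already been emitted
        open_windows[window_start] += 1
        watermark = max(watermark, event_time - max_out_of_orderness)
        closed = sorted(w for w in open_windows if w + window_size <= watermark)
        for start in closed:
            emitted.append((start, open_windows.pop(start)))
    # End of input: flush the windows that are still open.
    for start in sorted(open_windows):
        emitted.append((start, open_windows[start]))
    return emitted
```

With arrival order `[1, 12, 8, 17, 3, 25]`, a window size of 10, and an allowed out-of-orderness of 5, the result is `[(0, 2), (10, 2), (20, 1)]`. The event at time 8 arrives after the event at time 12 but is still counted in the first window, while the event at time 3 arrives after that window has closed and is dropped.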

Data Streaming and Batch Processing in Flink

Flink handles both stream and batch processing with a single engine. It processes data streams event by event with low latency, making it well suited to applications that need immediate insights from continuous data. It treats batch jobs as bounded streams, so large stored datasets are processed with the same parallel, distributed runtime.

Scalability and Fault Tolerance in Flink

- Flink offers excellent scalability by allowing users to scale their processing jobs horizontally by adding more resources as needed.

- It implements fault tolerance through checkpointing, which periodically snapshots the state of a running job, and automatic recovery, which restarts a failed job from the latest checkpoint.

- Because state is restored from a consistent snapshot and the input is replayed from that point, failures can be handled without compromising data integrity.
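
Checkpointing and recovery can be illustrated with a small simulation. The sketch below is plain Python under simplifying assumptions, not Flink's implementation: `CheckpointedCounter` and `run` are hypothetical names, the input is a replayable list, and a failure is simulated once at a chosen offset.

```python
import copy


class CheckpointedCounter:
    """Keyed running count that snapshots its state and input offset together."""

    def __init__(self):
        self.counts = {}
        self.offset = 0  # index of the next record to read
        self._checkpoint = ({}, 0)

    def process(self, record):
        self.counts[record] = self.counts.get(record, 0) + 1
        self.offset += 1

    def checkpoint(self):
        self._checkpoint = (copy.deepcopy(self.counts), self.offset)

    def restore(self):
        counts, offset = self._checkpoint
        self.counts = copy.deepcopy(counts)
        self.offset = offset


def run(records, checkpoint_every, fail_at=None):
    """Process all records, optionally simulating one crash at index `fail_at`."""
    job = CheckpointedCounter()
    failed = False
    while job.offset < len(records):
        if fail_at is not None and not failed and job.offset == fail_at:
            failed = True
            job.restore()  # simulated crash: roll back state, rewind the input
            continue
        job.process(records[job.offset])
        if job.offset % checkpoint_every == 0:
            job.checkpoint()
    return job.counts
```

Running `run(["a", "b", "a", "c", "a"], 2, fail_at=3)` gives the same counts as a run without a failure. The key design point is that the state and the input offset are saved together, so replaying from the checkpoint neither skips nor double-counts records.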

Data Processing with Apache Storm

Apache Storm is a distributed framework for real-time processing of data streams, designed to be scalable and fault-tolerant.

Apache Storm Architecture and Components

Apache Storm uses a master-worker architecture consisting of Nimbus (the master node) and multiple Supervisor nodes (the worker nodes). Nimbus distributes code across the cluster and assigns tasks, while each Supervisor starts and stops worker processes to execute the tasks assigned to it. Coordination between Nimbus and the Supervisors goes through a ZooKeeper cluster. A Storm application is built from the following components:

- Spouts: Responsible for ingesting data streams into the Apache Storm cluster.

- Bolts: Perform processing tasks on the ingested data streams.

- Topology: Represents the overall data processing flow, consisting of interconnected spouts and bolts.
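
The relationship between spouts, bolts, and a topology can be sketched with Python generators. This is a single-process illustration and does not use Storm's API; the names `sentence_spout`, `split_bolt`, `count_bolt`, and `run_topology` are hypothetical, and a real topology would run many parallel instances of each component across the cluster.

```python
from collections import Counter


def sentence_spout(sentences):
    """Spout: emits one tuple per incoming sentence."""
    for sentence in sentences:
        yield (sentence,)


def split_bolt(tuples):
    """Bolt: splits each sentence tuple into one tuple per word."""
    for (sentence,) in tuples:
        for word in sentence.split():
            yield (word,)


def count_bolt(tuples):
    """Bolt: keeps a running count per word and emits (word, count) updates."""
    counts = Counter()
    for (word,) in tuples:
        counts[word] += 1
        yield (word, counts[word])


def run_topology(sentences):
    """Topology: wires spout -> split bolt -> count bolt and keeps the latest counts."""
    latest = {}
    for word, count in count_bolt(split_bolt(sentence_spout(sentences))):
        latest[word] = count
    return latest
```

Calling `run_topology(["storm processes streams", "storm scales"])` returns a count of 2 for `storm` and 1 for each other word. Each stage consumes tuples as they arrive and emits new ones downstream, which mirrors how data flows through interconnected spouts and bolts.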

Companies Utilizing Apache Storm

Companies across various industries have used Apache Storm for real-time data processing. For example, Twitter open-sourced Storm in 2011 and used it to process tweets in real time before announcing its successor, Heron, in 2015. Uber has likewise been reported to use Storm for real-time data streams related to ride requests, driver locations, and passenger updates.

Frequently Asked Questions

What are the key characteristics of effective big data processing frameworks?

Effective big data processing frameworks should offer horizontal scalability, fault tolerance, high performance, and the flexibility to support various data types and processing tasks.

How does Apache Storm facilitate real-time processing of big data?

Apache Storm enables real-time processing through its distributed and fault-tolerant architecture, allowing for continuous data streams to be processed efficiently.

What industries commonly use Hadoop for processing big data?

Industries such as financial services, healthcare, retail, and telecommunications often use Hadoop to process large volumes of data, for example to detect fraud, support medical research, and personalize marketing.

What sets Spark apart from other big data processing frameworks in terms of performance?

Spark’s in-memory processing and optimized computing engine contribute to its high performance, making it ideal for handling complex data processing tasks efficiently.